1. Our Team

We are a team of ambitious students pursuing master's degrees in Canada. Having studied at the same French engineering school, IMT Atlantique, we are used to collaborating to accomplish great work. We proudly reached 4th place in Phase I. All of our work is available here.

Gilles Schneider

Computer Science and Engineering student, Montreal. Technology enthusiast. Chef. Cinephile. Photographer (but less than Hugo).

Hugo Kermabon

Research assistant in Cybersecurity, Montreal. Photographer. CTF Challenger. Chef (but less than Gilles).

2. Motivations

A current or past infection with SARS-CoV-2, the virus that causes COVID-19, can be detected by a viral test or an antibody test, respectively. However, artificial intelligence could help researchers diagnose COVID-19 from X-ray images, accelerating the treatment of infected people and saving lives.

We faced a major limitation during Phase I: the number of confirmed positive cases in the evaluation set was very low. This led us to aggregate the results of two networks that had the same architecture but different weights. Both networks were trained on the same positive images but on different negative images. The results were satisfying, but the approach was not rigorous enough. We had an idea in mind: what if we could train a set of small networks on different data and merge their predictions using another network? Our next contribution to the fight against COVID-19 is based on collective intelligence.

3. Swarm Deep-Learning

This section introduces the key ideas of Swarm Deep-Learning and the model that we want to develop. It therefore does not go into great technical detail.

3.1 Analogy

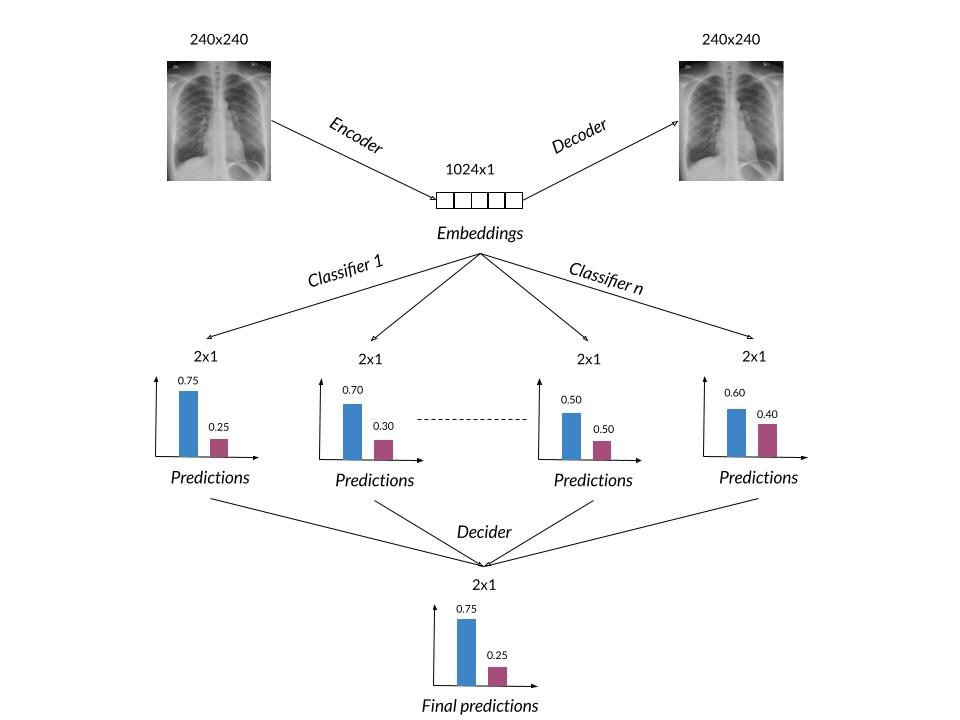

We initialize a set (swarm) of small classifiers with identical architectures (bees) and a final decider (queen). The queen uses the predictions of the bees to make the final prediction.
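To make the analogy concrete, here is a minimal sketch of the inference data flow. It assumes that each bee is a callable mapping an embedding to a probability of COVID-19, and that the queen is a callable mapping the vector of bee probabilities to a final probability. The names `swarm_predict`, `make_feature_bee` and `mean_queen` are hypothetical, and the toy bees and averaging queen only stand in for trained networks.

```python
from typing import Callable, Sequence

# Hypothetical types: an embedding is a vector of floats, a bee maps an
# embedding to a probability of COVID-19, and the queen maps the bees'
# probabilities to the final probability.
Embedding = Sequence[float]
Bee = Callable[[Embedding], float]
Queen = Callable[[Sequence[float]], float]


def swarm_predict(embedding: Embedding, bees: Sequence[Bee], queen: Queen) -> float:
    """Run every bee on the same embedding, then let the queen decide."""
    bee_outputs = [bee(embedding) for bee in bees]
    return queen(bee_outputs)


def make_feature_bee(index: int) -> Bee:
    """Toy bee (stand-in for a trained classifier): clips one feature to [0, 1]."""
    def bee(embedding: Embedding) -> float:
        return min(max(embedding[index], 0.0), 1.0)
    return bee


def mean_queen(bee_outputs: Sequence[float]) -> float:
    """Toy queen (stand-in for a trained network): plain average."""
    return sum(bee_outputs) / len(bee_outputs)


bees = [make_feature_bee(i) for i in range(3)]
print(swarm_predict([2.0, -1.0, 0.5], bees, mean_queen))  # 0.5
```

Every bee sees the same embedding; only the queen sees all the bees' outputs at once.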

3.2 Data encoding

To use small classifiers as bees, we need to encode the chest X-rays, reducing their dimensionality. We train an encoder-decoder (U-NET) either to reconstruct the input images or to segment the lungs. The encoder-decoder generates lower-dimensional embeddings of the input images. Once training is complete, we freeze its weights and keep only the encoder.
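The sketch below only illustrates the "train, freeze, keep the encoder" steps; it is not a U-NET. As a stand-in, it uses PCA on flattened images (a linear autoencoder trained with a mean-squared reconstruction loss spans the same subspace as PCA). The class name `LinearEncoderStandIn`, the use of NumPy and the image sizes are assumptions made for illustration.

```python
import numpy as np


class LinearEncoderStandIn:
    """PCA used as a linear stand-in for the trained encoder-decoder (hypothetical)."""

    def __init__(self, n_components: int):
        self.n_components = n_components
        self.mean_ = None
        self.components_ = None  # the "encoder weights", frozen after fit()

    def fit(self, images: np.ndarray) -> "LinearEncoderStandIn":
        flat = images.reshape(len(images), -1).astype(np.float64)
        self.mean_ = flat.mean(axis=0)
        # Rows of vt are principal directions, sorted by explained variance.
        _, _, vt = np.linalg.svd(flat - self.mean_, full_matrices=False)
        self.components_ = vt[: self.n_components]
        self.components_.setflags(write=False)  # "freeze" the weights
        return self

    def encode(self, images: np.ndarray) -> np.ndarray:
        """Encoder half: image -> low-dimensional embedding."""
        flat = images.reshape(len(images), -1).astype(np.float64)
        return (flat - self.mean_) @ self.components_.T

    def decode(self, codes: np.ndarray) -> np.ndarray:
        """Decoder half: only needed during training, discarded afterwards."""
        return codes @ self.components_ + self.mean_


rng = np.random.default_rng(0)
xrays = rng.random((50, 32, 32))  # 50 fake 32x32 "X-rays"
encoder = LinearEncoderStandIn(n_components=16).fit(xrays)
embeddings = encoder.encode(xrays)  # only the encoder is kept for the bees
print(embeddings.shape)  # (50, 16)
```

Each 1,024-pixel toy image becomes a 16-dimensional embedding, which is the kind of input a small bee can handle.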

3.4 Training the Swarm 🐝

We split the dataset into strata and assign one stratum to each bee. The strata are disjoint with respect to negative cases, but they can share positive cases. Every bee is connected to the encoder's output and fine-tuned on the embeddings of its own stratum. Once all the bees have been trained, we freeze their weights.
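A minimal sketch of the stratification, assuming the dataset is given as lists of image identifiers split by label. The function name `make_strata` is hypothetical, and giving every stratum all the positive cases is an assumption: it is one way for strata to share positives while keeping their negatives disjoint.

```python
import random
from typing import Dict, List, Sequence


def make_strata(
    positive_ids: Sequence[str],
    negative_ids: Sequence[str],
    n_bees: int,
    seed: int = 0,
) -> List[Dict[str, List[str]]]:
    """Split the dataset into one stratum per bee (hypothetical helper).

    Negatives are shuffled and dealt round-robin, so no two strata share a
    negative case. Assumption: every stratum receives all positive cases.
    """
    if n_bees < 1:
        raise ValueError("need at least one bee")
    negatives = list(negative_ids)
    random.Random(seed).shuffle(negatives)
    strata = []
    for bee in range(n_bees):
        strata.append({
            "positives": list(positive_ids),
            "negatives": negatives[bee::n_bees],  # every n_bees-th negative
        })
    return strata


strata = make_strata(["p1", "p2"], [f"n{i}" for i in range(10)], n_bees=3)
for i, stratum in enumerate(strata):
    print(i, len(stratum["positives"]), len(stratum["negatives"]))
# 0 2 4
# 1 2 3
# 2 2 3
```

With `n_bees=7`, each bee would see about 1/7 ≈ 14% of the negatives, roughly the share used per Phase I training run, while the swarm as a whole covers all of them.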

3.5 Training the Queen 👑

We connect the last layer of the model, the final decider (queen), to the outputs of the bees and fine-tune the queen on the whole dataset, so that it learns to make predictions based on the swarm's predictions. For every image in the dataset, at least one bee has already seen it during the training of the swarm, so that bee leads the predictions.
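A minimal sketch of the queen's training step, under the assumption that the queen is a single sigmoid unit (logistic regression) fitted by batch gradient descent on binary cross-entropy; the real queen could be any small network. Because the bees are frozen, their outputs are precomputed and only the queen's weights change. The names `train_queen` and `queen_predict`, the learning rate and the toy data are hypothetical; in the toy data, bee 0 plays the role of the bee that has already seen the images.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_queen(
    bee_outputs: Sequence[Sequence[float]],  # one row of frozen bee outputs per image
    labels: Sequence[int],                   # 1 = COVID-19 positive, 0 = negative
    lr: float = 0.5,
    epochs: int = 2000,
) -> Tuple[List[float], float]:
    """Fit a logistic-regression queen on frozen bee outputs (hypothetical)."""
    n_bees = len(bee_outputs[0])
    weights = [0.0] * n_bees
    bias = 0.0
    n = len(labels)
    for _ in range(epochs):
        grad_w = [0.0] * n_bees
        grad_b = 0.0
        for x, y in zip(bee_outputs, labels):
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            err = p - y  # gradient of binary cross-entropy w.r.t. the logit
            for j in range(n_bees):
                grad_w[j] += err * x[j]
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def queen_predict(weights: Sequence[float], bias: float, x: Sequence[float]) -> float:
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


# Toy data: bee 0 is reliable, bees 1 and 2 are noisy.
X = [[0.9, 0.5, 0.1], [0.8, 0.2, 0.7], [0.1, 0.6, 0.2], [0.2, 0.4, 0.9]]
y = [1, 1, 0, 0]
weights, bias = train_queen(X, y)
print([round(queen_predict(weights, bias, x), 2) for x in X])
```

On this toy data the queen ends up giving the largest weight to bee 0, which is the behaviour the leading-bee argument above relies on.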

The model is shown in Figure 1.

Figure 1: From top to bottom: Encoder-decoder. Swarm (set of n classifiers). Queen (decider).

4. Discussion

The advantages and limitations listed below are hypothetical, as we have not tested the model yet. We have not found similar approaches in the scientific community; this is a new direction that we wish to explore. Whether the approach turns out to be efficient or not, we will explain the reasons why.

4.1 Advantages

Here is a non-exhaustive list of the advantages of this new approach over our first approach:

1. The aggregation will be done by a neural network (the queen) and will no longer rely on a deterministic formula such as a majority vote (>50%).

2. The swarm will use the whole dataset, whereas each training run in Phase I used only a random 14% of the negative images. Furthermore, every bee will build its own representation of the COVID-19 detection problem, since each bee is trained on negative cases that no other bee sees.

3. The swarm provides exploitable statistics on its predictions (mean, standard deviation, distribution, etc.), which could be a powerful tool for practitioners. We can also study the swarm by varying the number of bees, or assign specific data to each bee, for instance to make the bees variant-specific.

4. Using lung segmentation to generate the embeddings lets the bees focus on the areas of a possible infection.
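The contrast between a fixed aggregation rule and the statistics a swarm exposes can be sketched in a few lines, assuming each bee outputs a probability. `majority_vote` implements a >50% majority rule like the one cited in the first advantage, and `swarm_statistics` computes summaries a practitioner could inspect next to the queen's prediction. Both function names and the 0.5 decision threshold are hypothetical.

```python
import statistics
from typing import Dict, Sequence


def majority_vote(bee_probs: Sequence[float], threshold: float = 0.5) -> int:
    """Fixed rule: positive only if strictly more than half the bees say so."""
    votes = sum(p > threshold for p in bee_probs)
    return int(votes > len(bee_probs) / 2)


def swarm_statistics(bee_probs: Sequence[float], threshold: float = 0.5) -> Dict[str, float]:
    """Summary of the swarm's opinion on a single image."""
    return {
        "mean": statistics.mean(bee_probs),
        "stdev": statistics.pstdev(bee_probs),
        "positive_share": sum(p > threshold for p in bee_probs) / len(bee_probs),
        "min": min(bee_probs),
        "max": max(bee_probs),
    }


probs = [0.9, 0.8, 0.2, 0.1, 0.7]  # outputs of five hypothetical bees
print(majority_vote(probs))
print(swarm_statistics(probs))
```

A 2-2 split such as `[0.9, 0.8, 0.1, 0.2]` is always negative under the fixed rule, whereas a learned queen could weigh which bees disagree, and the standard deviation flags the image as contested.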

4.2 Limitations

Here is a non-exhaustive list of the limitations of this new approach compared with our first approach:

1. The bees might not be deep enough to achieve good performance. That might lead us to make them deeper, thus increasing the memory footprint. Designing the bees will not be an easy task.

2. The embeddings generated by the encoder-decoder may not be suitable for a classification task. Furthermore, each stage (encoder, swarm, queen) adds its own error to the original data.

3. Training the swarm might be time-consuming.
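To put rough numbers on the first and last limitations, the sketch below counts the parameters of a swarm of fully connected bees and the number of training samples the swarm processes per epoch. The embedding size of 512, the layer widths, the number of bees and the assumption that every stratum contains all the positive cases are hypothetical choices for illustration.

```python
from typing import Sequence


def mlp_params(layer_sizes: Sequence[int]) -> int:
    """Weights + biases of a fully connected network with these layer widths."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


def swarm_params(n_bees: int, bee_layers: Sequence[int], queen_layers: Sequence[int]) -> int:
    """Parameters of n identical bees plus the queen (encoder excluded)."""
    return n_bees * mlp_params(bee_layers) + mlp_params(queen_layers)


def swarm_epoch_samples(n_neg: int, n_pos: int, n_bees: int) -> int:
    """Samples per swarm epoch, assuming each stratum holds every positive case."""
    return n_neg + n_bees * n_pos


print(swarm_params(10, [512, 1], [10, 1]))            # 5141
print(swarm_params(10, [512, 256, 64, 1], [10, 1]))   # 1478421
print(swarm_epoch_samples(n_neg=1000, n_pos=100, n_bees=7))  # 1700
```

Going from single-layer bees to three-layer bees multiplies the swarm's size by roughly 290 (about 5.9 MB in float32 for ten bees), and under the shared-positives assumption the positive images are processed once per bee, so training cost grows with the number of bees.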